Hello, my dear blog readers! Why so serious? Take a breath, crack a smile, and get ready to learn something new in this post.

In this article, I will explain how I uncover most bugs following a thorough initial reconnaissance. I’m also excited to share some of my secrets with you.

Whenever I begin testing a new target—let’s use Thanos.com as an example—I start by launching Burp Suite, enabling my proxy, and logging my traffic into Burp Suite. I then explore the website, clicking on every link to understand the site’s functionality. This approach ensures that each endpoint is logged in the Burp Suite tree, aiding in my understanding of the application. As I navigate, links may redirect me to various subdomains like blog.thanos.com or shop.thanos.com, and I ensure all these subdomains are captured.

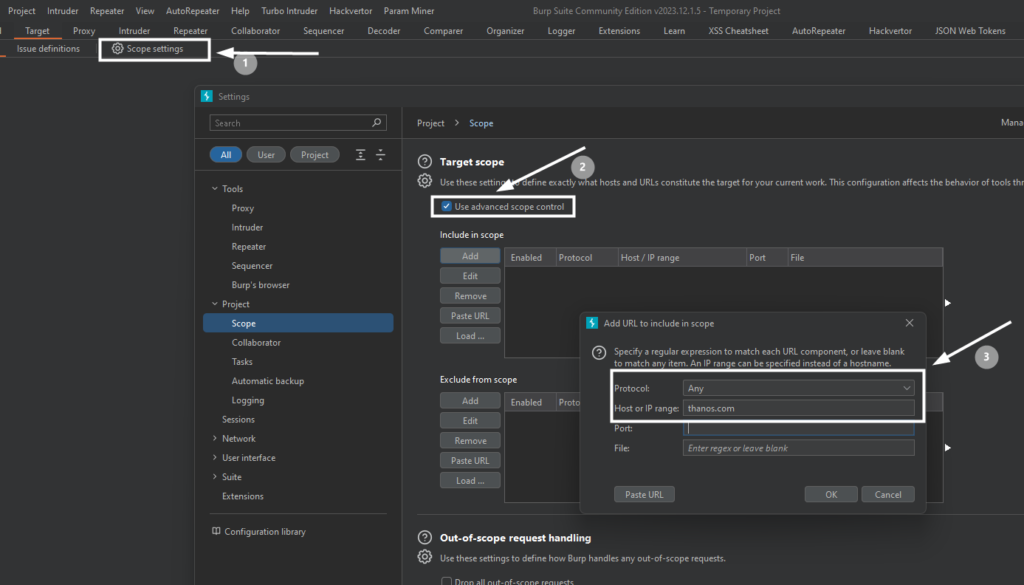

In Burp Suite, set the scope first so that only the target's traffic stays in view:

Navigate to Target > Scope settings > enable "Use advanced scope control" > add an include rule with the protocol set to Any > enter the domain without the https:// prefix (for example, thanos.com)

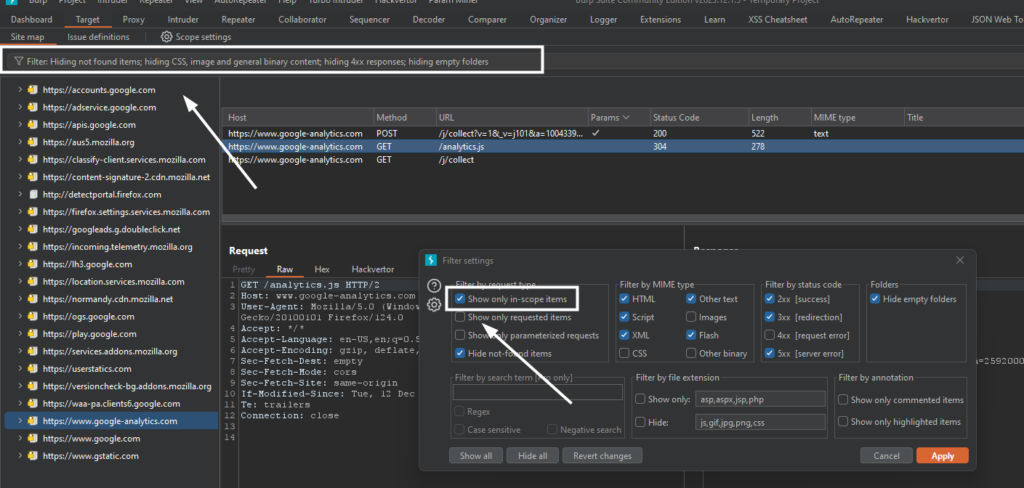

At this point the site map will still show a lot of junk traffic that we don't need. A few clicks clean it up:

Go to Target > Site map > click the filter bar (it reads "Filter: Hiding not found items") > check "Show only in-scope items"

With these settings, the site map shows only the in-scope traffic you've browsed. Manual spidering, input testing, and server response analysis are time-consuming but essential for understanding how the application handles data. This process often reveals numerous subdomains, endpoints, and inputs, and testing them is where most vulnerabilities turn up. For automated spidering, throttle Burp Suite to an appropriate number of requests per second. In newer versions without spidering, initiate a passive crawl for the target.
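If you export URLs out of Burp and want to apply the same "in-scope only" rule in a script, here is a minimal sketch. It assumes scope means thanos.com plus any of its subdomains; the `in_scope` helper and the sample URLs are made up for illustration and are not part of Burp.

```python
from urllib.parse import urlsplit


def in_scope(url: str, root_domain: str = "thanos.com") -> bool:
    """Return True if the URL's host is the root domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    root = root_domain.lower()
    # Require a leading dot so that "notthanos.com" does not match "thanos.com".
    return host == root or host.endswith("." + root)


# Hypothetical sample of browsed URLs.
urls = [
    "https://thanos.com/login",
    "http://blog.thanos.com/post?id=1",
    "https://cdn.example.net/lib.js",
    "https://notthanos.com/",
]

in_scope_urls = [u for u in urls if in_scope(u)]
print(in_scope_urls)
```

The leading-dot check is the important detail: a plain "ends with thanos.com" test would also accept look-alike domains.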

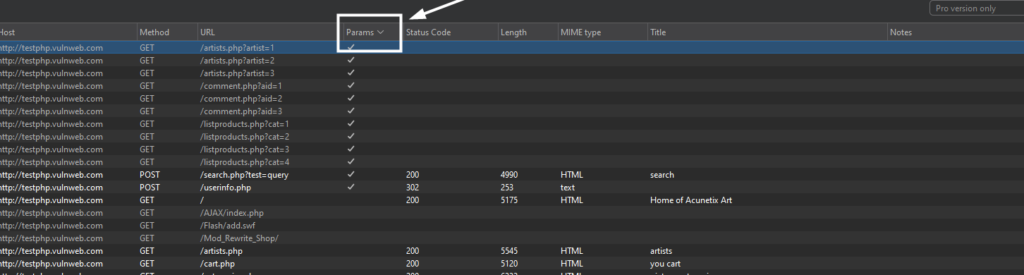

After completing these steps, you’ll have access to raw requests and parameters for each request, potentially amounting to thousands. To narrow your focus, filter for requests that contain parameters, which can eliminate 50%-70% of the total requests:

Navigate to the Target tab, open the site map, and double-click the Params column header so that requests with parameters are sorted to the top.

This process reveals a vast number of requests with parameters.
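The same filter is easy to reproduce on a plain list of URLs. The sketch below is a rough stand-in for what sorting by params gives you in Burp; it only looks at query-string parameters, so body and cookie parameters are not covered. The `has_params` helper and the sample URLs are hypothetical.

```python
from urllib.parse import parse_qsl, urlsplit


def has_params(url: str) -> bool:
    """Return True if the URL carries at least one query-string parameter."""
    query = urlsplit(url).query
    # keep_blank_values=True so that "?debug=" still counts as a parameter.
    return len(parse_qsl(query, keep_blank_values=True)) > 0


# Hypothetical sample of collected URLs.
urls = [
    "https://thanos.com/",
    "https://thanos.com/search?q=stones",
    "https://shop.thanos.com/item?id=6&debug=",
    "https://blog.thanos.com/about",
]

with_params = [u for u in urls if has_params(u)]
print(f"{len(with_params)} of {len(urls)} URLs have parameters")
```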

However, our search doesn't end there. In my experience, the more parameters you test, the more bugs and vulnerabilities you tend to find.

Several tools, particularly on GitHub, are invaluable for finding URLs. Let’s use two notable tools: gau (get all URLs) and waybackurls. First, install gau on your computer and perform subdomain enumeration, saving all subdomains to a text file. Then, use the following command to leverage gau:

cat sub_domains.txt | gau --threads x --proxy your_burp_proxy

This command fetches URLs from various sources at an appropriate speed and sends them to the Burp proxy, where you’ll find a treasure trove of URLs and parameters. For URLs that cannot be sent through a proxy with waybackurls, save the output and then pass it to gau:

echo thanos.com | waybackurls > wayback_thanos.txt

cat wayback_thanos.txt | gau --proxy your_burp_proxy --threads x
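If some of the saved URLs still do not show up in the site map, one fallback is to request them through the proxy yourself. The sketch below is only an illustration under a few assumptions: Burp is listening on 127.0.0.1:8080, certificate checks are switched off because Burp re-signs HTTPS traffic with its own CA, and the function names are mine. Only run it against targets you are authorized to test.

```python
import ssl
import urllib.request


def build_burp_opener(proxy_url: str = "http://127.0.0.1:8080"):
    """Build a urllib opener that routes HTTP and HTTPS through the given proxy."""
    # Assumption: Burp's CA is not in the system trust store, so verification
    # is disabled. Install the CA and drop these two lines if you prefer.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url}),
        urllib.request.HTTPSHandler(context=ctx),
    )


def replay(urls, opener, timeout: float = 10.0):
    """Request each URL through the opener, yielding (url, status or error)."""
    for url in urls:
        try:
            with opener.open(url, timeout=timeout) as resp:
                yield url, resp.status
        except OSError as exc:  # URLError and HTTPError are OSError subclasses
            yield url, exc


# Example usage (commented out so nothing is sent by accident):
# opener = build_burp_opener()
# with open("wayback_thanos.txt") as fh:
#     for url, result in replay((line.strip() for line in fh if line.strip()), opener):
#         print(result, url)
```

Non-2xx responses surface as errors here, which is fine for this purpose: the request has still passed through the proxy and been logged.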

At this stage, you’ll notice a significant increase in the number of URLs and parameters at your disposal. This is because we are deploying our tools—such as gau and waybackurls—in a strategic manner, both recursively and in parallel with Burp Suite’s automated spidering capabilities. This strategy forms an effective combination, enhancing our reconnaissance efforts.

Utilizing these tools recursively means that we feed the output of one tool back into our process, enriching the pool of discovered URLs and parameters with each iteration. In parallel, Burp Suite’s automated spidering works independently to map out the application by following links and analyzing the content of the web application it encounters. This dual approach ensures a thorough exploration of the target application’s surface.

Incorporating additional tools into this process could introduce complexity, but it also increases the breadth and depth of our search, leading to the discovery of more results. This includes uncovering hidden parameters and endpoints that might not be immediately visible. The synergy between manual strategies and automated tools expands our coverage, ensuring that we miss fewer potential vulnerabilities.

As we continue this combined approach, the collection of URLs and parameters will grow. Although it may take some time to process and analyze all the data, the effort is worthwhile. This comprehensive examination allows us to uncover a wide array of requests, which are essential for thorough testing. Each request and its parameters can then be scrutinized for potential vulnerabilities, enhancing the effectiveness of our security assessment.
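As the pile grows, many URLs turn out to be the same endpoint with different parameter values. One way to keep the list manageable is to collapse them by "shape". This is a sketch of my own heuristic, not something gau or waybackurls does for you: two URLs count as duplicates when host, path, and the set of parameter names match. The helper names and sample data are hypothetical.

```python
from urllib.parse import parse_qsl, urlsplit


def endpoint_key(url: str):
    """Reduce a URL to (host, path, sorted parameter names), ignoring values."""
    parts = urlsplit(url)
    names = {name for name, _ in parse_qsl(parts.query, keep_blank_values=True)}
    return (parts.hostname or "", parts.path or "/", tuple(sorted(names)))


def dedupe(urls):
    """Keep the first URL seen for each endpoint shape, preserving order."""
    seen = set()
    unique = []
    for url in urls:
        key = endpoint_key(url)
        if key not in seen:
            seen.add(key)
            unique.append(url)
    return unique


# Hypothetical merged output from several tools.
merged = [
    "https://shop.thanos.com/item?id=1",
    "https://shop.thanos.com/item?id=2",
    "https://shop.thanos.com/item?id=2&ref=home",
    "https://thanos.com",
    "https://thanos.com/",
]

print(dedupe(merged))
```

The trade-off is that a value-dependent bug (say, only `id=2` misbehaves) can hide behind the first URL that was kept, so treat the reduced list as a starting point rather than the full picture.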

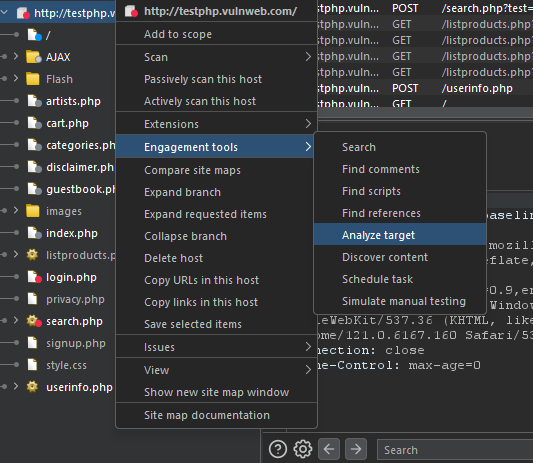

After a period of testing, you might notice you're testing the same parameter multiple times. To address this, Burp Suite Professional offers a feature that streamlines the process. Right-click the host in the Target tab, select Engagement tools, and then click Analyze target.

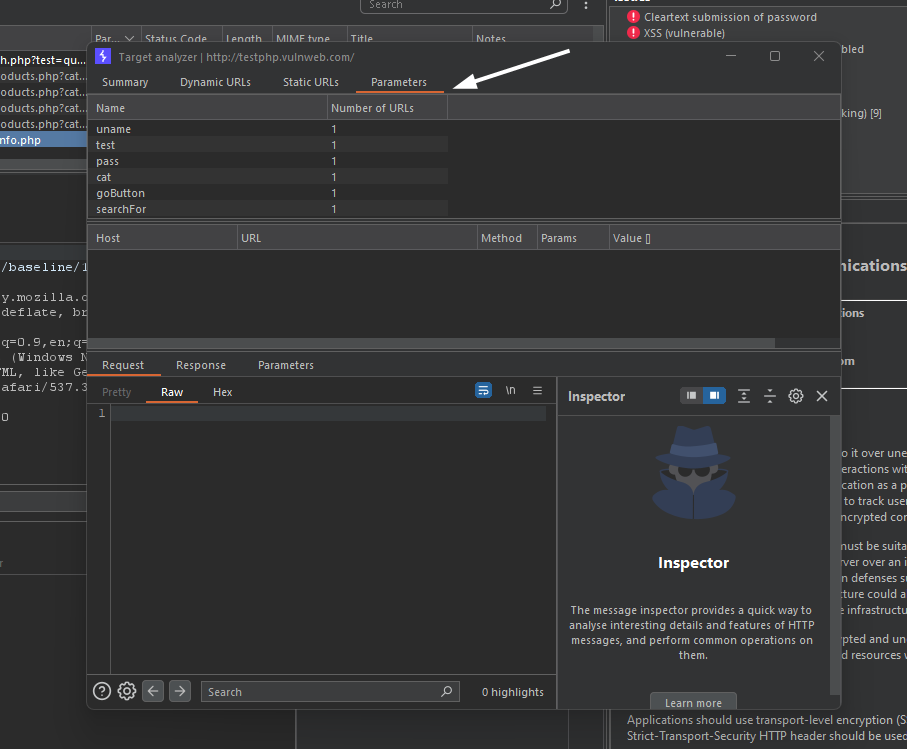

There, under the parameters tab, you’ll find all the unique parameters, allowing you to concentrate your testing efforts on specific parameters.
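If you don't have Burp Suite Professional, a rough approximation of that unique-parameter view can be built from any URL list. The sketch below only sees query-string parameters, unlike Burp, which also knows about body and cookie parameters; `unique_params` and the sample URLs are hypothetical names for illustration.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def unique_params(urls):
    """Count, for each parameter name, how many URLs it appears in."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        # A set, so a name repeated inside one URL is counted once for that URL.
        names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
        counts.update(names)
    return counts


# Hypothetical sample of collected URLs.
urls = [
    "https://shop.thanos.com/item?id=1&ref=home",
    "https://shop.thanos.com/cart?id=2",
    "https://blog.thanos.com/post?id=7&lang=en",
    "https://thanos.com/search?q=stones&lang=en",
]

for name, count in unique_params(urls).most_common():
    print(f"{name}: {count}")
```

Sorting by frequency is a design choice: names that appear everywhere (like `id`) are usually worth testing once thoroughly, while rare names often point at less-visited functionality.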

By employing these techniques in concert, we maximize our chances of identifying security weaknesses, thereby strengthening our overall security posture. Happy hunting!